Domain embeddings can distinguish iNaturalist tasks from iMaterialist tasks because the two problem domains differ. However, the fashion attribute tasks on iMaterialist all share the same domain and differ only in their labels. In this case, the domain embeddings collapse to a single region without recovering any sensible structure.
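The collapse can be illustrated with a minimal sketch, assuming the domain embedding is the mean of feature activations over a task's images, as the figure caption describes. The feature arrays and the `domain_embedding` helper are hypothetical stand-ins, not part of the original work.

```python
import numpy as np

def domain_embedding(features):
    """Mean feature activation over all images of a task (labels are unused)."""
    return features.mean(axis=0)

rng = np.random.default_rng(0)
# Hypothetical activations for two image domains (assumed, not real data).
fashion_features = rng.normal(loc=1.0, size=(100, 16))
nature_features = rng.normal(loc=-1.0, size=(100, 16))

# Two attribute tasks over the same images differ only in their labels,
# so their domain embeddings coincide.
color_task = domain_embedding(fashion_features)
material_task = domain_embedding(fashion_features)
species_task = domain_embedding(nature_features)

same_domain_gap = np.linalg.norm(color_task - material_task)
cross_domain_gap = np.linalg.norm(color_task - species_task)
print(same_domain_gap, cross_domain_gap)
```

Because the labels never enter the computation, tasks defined on the same images cannot be told apart, while tasks from different domains remain separated.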

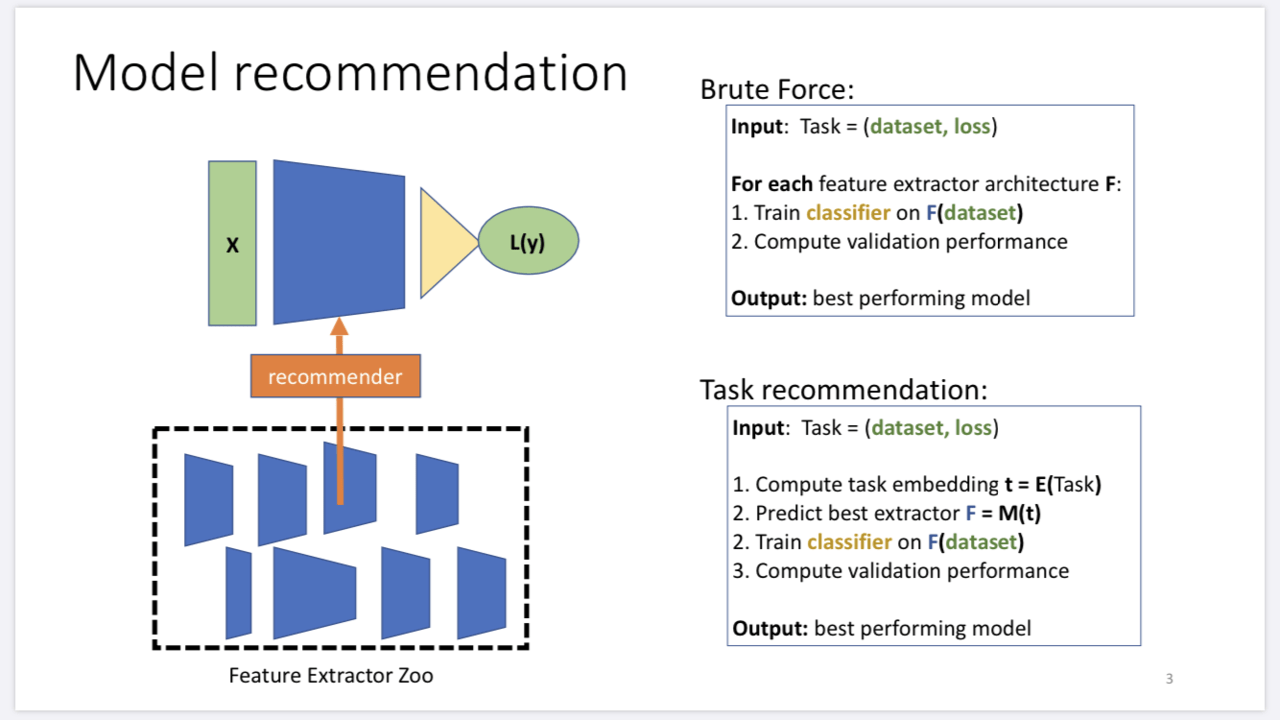

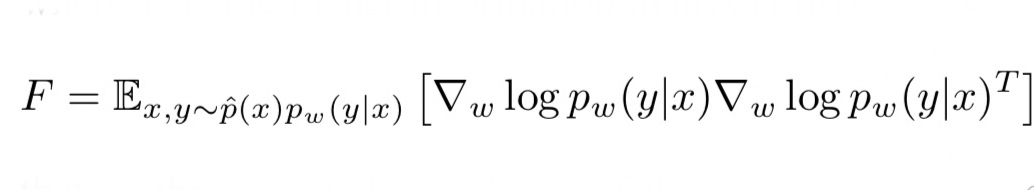

Figure 1: Task embedding across a large library of tasks (best seen magnified). (Left) t-SNE visualization of the embedding of tasks extracted from the iNaturalist, CUB-200, and iMaterialist datasets. Colors indicate ground-truth grouping of tasks based on taxonomic or semantic types. Notice that the bird classification tasks extracted from CUB-200 embed near the bird classification task from iNaturalist, even though the original datasets are different. iMaterialist is well separated from iNaturalist, as it entails very different tasks (clothing attributes). Notice that some tasks of similar type (such as color attributes) cluster together, but attributes of different task types may also mix when the underlying visual semantics are correlated. For example, the tasks of jeans (clothing type), denim (material), and ripped (style) recognition are close in the task embedding. (Right) t-SNE visualization of the domain embeddings (using mean feature activations) for the same tasks.

Given a dataset with ground-truth labels and a loss function defined over those labels, we process images through a “probe network” and compute an embedding based on estimates of the Fisher information matrix associated with the probe network parameters. This provides a fixed-dimensional embedding of the task that is independent of details such as the number of classes and does not require any understanding of the class label semantics. We demonstrate that this embedding is capable of predicting task similarities that match our intuition about semantic and taxonomic relations between different visual tasks (e.g., tasks based on classifying different types of plants are similar). We also demonstrate the practical value of this framework for the meta-task of selecting a pre-trained feature extractor for a new task. We present a simple meta-learning framework for learning a metric on embeddings that is capable of predicting which feature extractors will perform well. Selecting a feature extractor with task embedding achieves performance close to that of the best available feature extractor, while costing substantially less than exhaustively training and evaluating all available feature extractors.
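The Fisher-based embedding described above can be sketched in a few lines of NumPy. This is a toy illustration under stated assumptions, not the authors' implementation: the "probe network" is a single fixed tanh layer `v` (a real probe would be a pre-trained network), a softmax head is fitted per task, the expectation over labels is computed exactly, and plain cosine distance stands in for whatever metric is used to compare tasks. All function and variable names are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def fit_head(h, y, num_classes, steps=200, lr=0.5):
    """Fit a softmax head on fixed probe features by gradient descent."""
    w = np.zeros((h.shape[1], num_classes))
    onehot = np.eye(num_classes)[y]
    for _ in range(steps):
        p = softmax(h @ w)
        w -= lr * h.T @ (p - onehot) / len(y)   # cross-entropy gradient
    return w

def task_embedding(x, y, v, num_classes):
    """Diagonal Fisher information of the probe weights `v` for one task."""
    h = np.tanh(x @ v)                    # probe features, shape (N, H)
    w = fit_head(h, y, num_classes)       # task-specific head, shape (H, C)
    p = softmax(h @ w)                    # predicted class probabilities
    # d log p(c|x) / d logits for every candidate class c: shape (N, C, C)
    err = np.eye(num_classes)[None, :, :] - p[:, None, :]
    # Backpropagate through the head and the tanh: shape (N, C, H)
    delta = (err @ w.T) * (1.0 - h ** 2)[:, None, :]
    # Expected squared gradient over labels drawn from the model: shape (N, H)
    exp_sq = np.einsum("nc,nch->nh", p, delta ** 2)
    fisher_diag = (x ** 2).T @ exp_sq / len(x)   # shape (D, H)
    return fisher_diag.ravel()

def cosine_distance(a, b):
    return 1.0 - a @ b / (np.linalg.norm(a) * np.linalg.norm(b))

rng = np.random.default_rng(0)
v = rng.normal(size=(8, 4))               # assumed fixed probe weights
x = rng.normal(size=(200, 8))             # stand-in for image inputs
y_a = (x[:, 0] > 0).astype(int)           # toy task with 2 classes
y_b = np.digitize(x[:, 1], [-0.5, 0.5])   # toy task with 3 classes

emb_a = task_embedding(x, y_a, v, 2)
emb_b = task_embedding(x, y_b, v, 3)
print(emb_a.shape, emb_b.shape)           # both have D * H entries
print(cosine_distance(emb_a, emb_b))
```

The embedding is taken over the probe weights rather than the head, so its length depends only on the probe and not on the number of classes, which is what lets tasks with different label sets be compared directly.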

TASK2VEC HOW TO
